Visual Blocks for ML is a Google visual programming framework that lets you create ML pipelines in a no-code graph editor. You can quickly prototype workflows by connecting drag-and-drop ML components, including models, user inputs, processors, and visualizations.

Why should you consider using Visual Blocks?

Developing and iterating on ML-based multimedia prototypes can be challenging and costly. It usually involves a cross-functional team of ML practitioners who fine-tune the models, evaluate robustness, characterize strengths and weaknesses, inspect performance in the end-use context, and develop the applications. Moreover, models are frequently updated and require repeated integration efforts before evaluation can occur, which makes the workflow ill-suited to design exploration and experimentation.

- Simplifying Development: Visual Blocks aims to lower the development barriers for creating real-time multimedia applications based on machine learning. This is significant because it makes the development process more accessible to a wider audience, including those who may not have extensive coding or technical expertise.

- Experimentation without Coding: Visual Blocks provides a no-code development environment. This means you can experiment with machine learning models and pipelines without writing code. This is especially valuable for designers, content creators, and others who want to harness the power of ML without delving into programming.

- Ready-Made Models and Datasets: The platform offers pre-built models and datasets for common multimedia tasks, such as body segmentation, landmark detection, and depth estimation. This feature saves users time and effort in finding, training, and fine-tuning models on their own.

- Versatility in Input and Output: Visual Blocks supports various types of input data, including images, videos, and soon, audio. It also allows you to generate different types of output, such as graphics and sound. This flexibility enables users to tackle a wide range of multimedia projects.

- User-Friendly Data Manipulation: The platform provides interactive data augmentation and manipulation through simple drag-and-drop operations and parameter adjustments. This lets users adjust their pipelines and experiments with ease.

- Visual Comparison: Visual Blocks allows for side-by-side comparisons of models and their outputs at different stages of the pipeline. This is invaluable for assessing the strengths and weaknesses of different models and making informed decisions during the development process.

- Easy Sharing and Collaboration: Users can easily share their visualizations and publish multimedia pipelines to the web. This makes it effortless to showcase your work, collaborate with team members, or present your applications to a wider audience.

- Streamlined Communication: Visual Blocks provides a common visual language for describing machine learning pipelines. This makes it easier for designers and developers to collaborate effectively, ensuring that both technical and creative aspects are aligned.

What is Visual Blocks?

Visual Blocks is a visual programming framework for rapidly prototyping and experimenting with ML models and pipelines. Using Visual Blocks, you can mix and match premade ML models and pipeline components in a graphical editor. The editor renders your pipeline as an interactive node graph where you can connect components by dragging and dropping nodes.

Visual Blocks provides three core components:

- A library of nodes representing server-side models, in-browser models, and pre- and post-processing visualizers.

- A runner that runs the graph and displays the output of all or specific nodes.

- A visual editor where you can create your graph by connecting nodes with matching inputs and outputs.
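To make the three components concrete, here is a toy model of a typed node graph. It is a minimal Python sketch under our own assumptions, not Visual Blocks' actual implementation (which is JavaScript-based) or its API: every name in it (`NodeSpec`, `Graph`, `connect`, `run`, and the example nodes) is hypothetical. It shows a small node library whose entries declare typed ports, an editor-style rule that only connects an output to an input of the same type, and a runner that executes nodes in dependency order and returns the outputs of all nodes or of one specific node.

```python
# Hypothetical toy node graph; NOT the Visual Blocks API.
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, Optional, Tuple

Port = Tuple[str, str]  # (node_id, port_name)


@dataclass
class NodeSpec:
    """A library entry: typed input/output ports plus the function that runs it."""
    name: str
    inputs: Dict[str, str]   # port name -> data type, e.g. {"image": "Image"}
    outputs: Dict[str, str]
    fn: Callable[..., Dict[str, Any]]


@dataclass
class Graph:
    nodes: Dict[str, NodeSpec] = field(default_factory=dict)
    edges: Dict[Port, Port] = field(default_factory=dict)  # input port -> output port

    def add(self, node_id: str, spec: NodeSpec) -> None:
        self.nodes[node_id] = spec

    def connect(self, src: str, src_port: str, dst: str, dst_port: str) -> None:
        """Editor rule: only connect an output to an input of the same type."""
        out_type = self.nodes[src].outputs[src_port]
        in_type = self.nodes[dst].inputs[dst_port]
        if out_type != in_type:
            raise TypeError(f"cannot connect {out_type} output to {in_type} input")
        self.edges[(dst, dst_port)] = (src, src_port)

    def run(self, only: Optional[str] = None) -> Dict[str, Dict[str, Any]]:
        """Runner: execute nodes in dependency order; return all outputs or one node's."""
        deps = {nid: set() for nid in self.nodes}  # node -> upstream nodes
        for (dst, _), (src, _) in self.edges.items():
            deps[dst].add(src)
        results: Dict[str, Dict[str, Any]] = {}
        for nid in TopologicalSorter(deps).static_order():
            spec = self.nodes[nid]
            kwargs = {}
            for port in spec.inputs:
                if (nid, port) not in self.edges:
                    raise ValueError(f"input {nid}.{port} is not connected")
                src, src_port = self.edges[(nid, port)]
                kwargs[port] = results[src][src_port]
            results[nid] = spec.fn(**kwargs)
        return {only: results[only]} if only else results


# A tiny hypothetical node library working on grayscale images (lists of pixel rows).
LIBRARY = {
    "input_image": NodeSpec("Input image", {}, {"image": "Image"},
                            lambda: {"image": [[0, 100], [200, 255]]}),
    "invert": NodeSpec("Invert", {"image": "Image"}, {"image": "Image"},
                       lambda image: {"image": [[255 - p for p in row] for row in image]}),
    "threshold": NodeSpec("Threshold", {"image": "Image"}, {"mask": "Mask"},
                          lambda image: {"mask": [[p > 127 for p in row] for row in image]}),
}

g = Graph()
for node_id in ("input_image", "invert", "threshold"):
    g.add(node_id, LIBRARY[node_id])
g.connect("input_image", "image", "invert", "image")
g.connect("invert", "image", "threshold", "image")

out = g.run()
print(out["threshold"]["mask"])  # [[True, True], [False, False]]

try:
    g.connect("threshold", "mask", "invert", "image")  # Mask output into an Image input
except TypeError as err:
    print("rejected:", err)
```

Running nodes in topological order guarantees that each node executes only after every node it consumes from, and returning every node's outputs is what makes it possible to preview or compare intermediate results rather than only the final one.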

How does Visual Blocks work?

Visual Blocks is mainly written in JavaScript. It leverages TensorFlow.js and TensorFlow Lite for ML capabilities and three.js for graphics rendering.

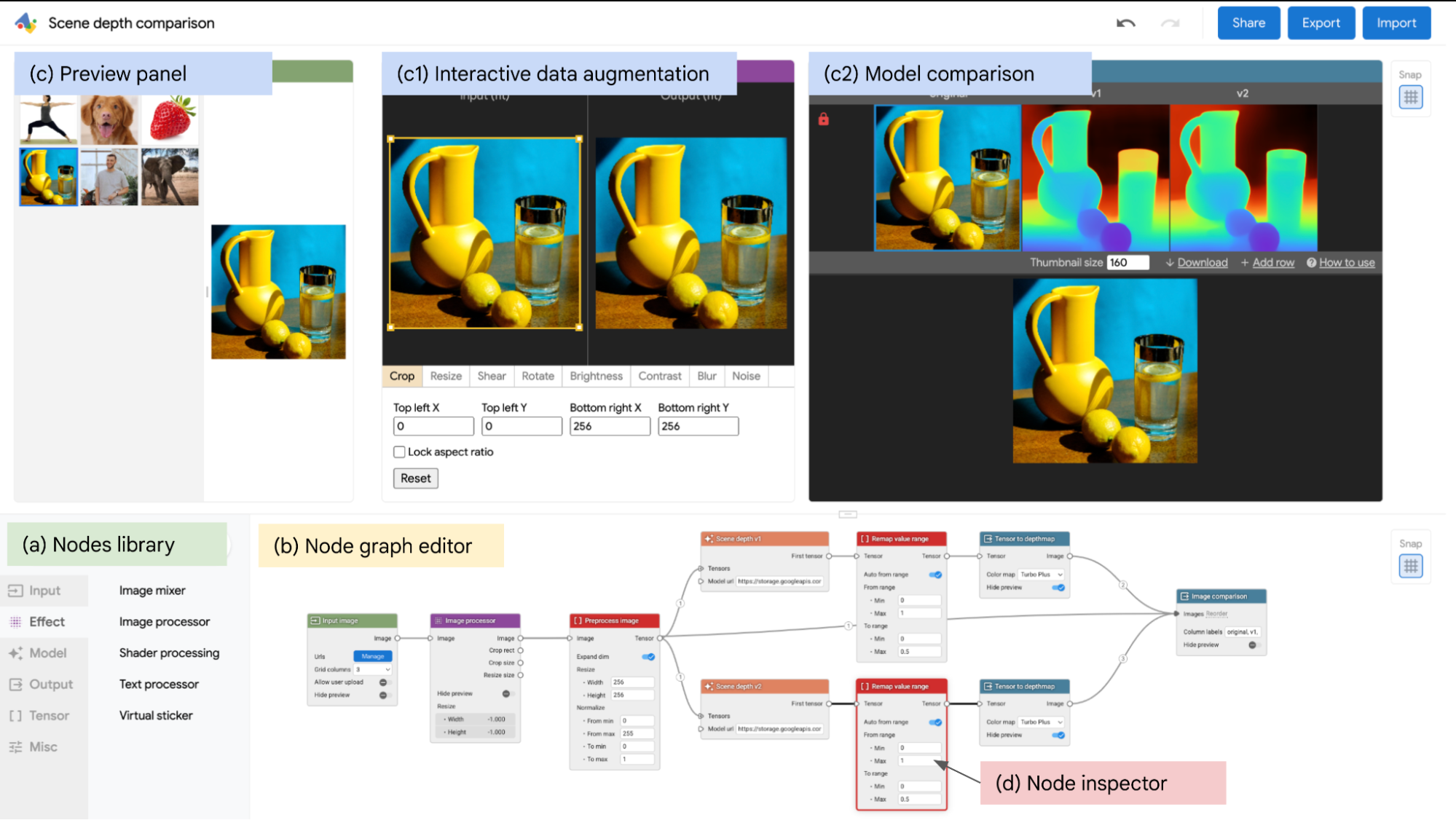

The Visual Blocks interface helps you rapidly build and interact with ML models using three coordinated views:

- A node library containing more than 30 nodes (including nodes for image processing, body segmentation, and image comparison) and a search bar for filtering.

- A node-graph editor that lets you create multimedia pipelines by dragging and dropping nodes from the library.

- A preview panel that shows pipeline inputs and outputs, provides immediate updates, and enables comparison of different models.

How do I get started?

Currently, Visual Blocks is available as a Colab integration. The integration lets you build interactive demos in Colab notebooks using prebuilt components.
